Streamdal Use Cases

Streamdal gives you the ability to embed data-handling pipelines directly into your application code. This means that once an application has the Streamdal `.process()` wrapped around the code where data is read or written, data will go through any checks (rules and pipelines) you define and attach to this code.

So, there is a wide variety of use cases for Streamdal!

Below is a curated list of use cases based on current implementations we have seen, and battle-testing we’ve done with our design partners and contributors.

Data Privacy

There are a lot of tools for data privacy, and they generally fall into two buckets:

- Tools that scan/monitor your databases/data warehouses in batches for discovery, cataloging, and/or anomalies

- Tools like SIEMs and log ingestors

Streamdal isn’t a replacement for those tools. Streamdal introduces a simplified method to handle data privacy from where data originates: your application code. By embedding the data pipeline engine into your code (via SDK), you’ll be solving for the capability gaps in contemporary data privacy tooling, or mitigating some of the drawbacks that those tools introduce, like how they:

- Add more complexity to an organization’s infrastructure

- Generate more tickets than they solve

- Are reactive and prevent nothing

- Are incredibly expensive, and are starting to introduce unforeseen costs

- Don’t help your mechanisms of action, or actively hamper application performance and/or the development process

See how Streamdal lines up vs. contemporary Data Privacy Tools.

PII Handling

Similar to the Data Privacy use case, there are many methods, tools, and platforms that can be used to handle PII. Those usually fall into the same buckets of functionality, but might also offer capabilities like:

- Data Loss Prevention (DLP), by integrating into all of your organization’s data-handling environments (E-mail, Google Docs, etc)

- Multi-tenant data segregation and/or encryption/tokenization

Streamdal isn’t a replacement for those tools either. However, Streamdal is scoped to work within your bespoke applications or services (via SDK), and can govern how they handle PII data at runtime.

You would use Streamdal to cover the gaps in handling PII, such as:

- Ensuring authorized applications never handle PII incorrectly, or inadvertently expose PII to unauthorized applications or storage after read

- Detecting PII explicitly or dynamically in a payload, and applying rules (like transformations, obfuscations, notifications, etc)

- Detecting tokenization or encryption usage, and preventing non-compliant data from flowing

The data pipelines you can embed into your code with Streamdal allow a wide range of PII checks that you can enforce at runtime.
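To make the idea of detecting PII in a payload and applying an obfuscation rule more concrete, here is a minimal, self-contained sketch in plain Python. It does not use the Streamdal SDK, and it is not how Streamdal's detective or transform steps are implemented. The keyword list and the helper names (`find_pii_paths`, `mask`, `mask_pii`) are hypothetical and chosen only for illustration.

```python
from typing import Any

# Hypothetical keyword list for illustration only; not Streamdal's detection logic.
PII_KEYWORDS = {"email", "password", "birthdate", "ssn"}


def find_pii_paths(payload: dict, prefix: str = "") -> list:
    """Return dotted paths of keys whose name matches a PII keyword."""
    found = []
    for key, value in payload.items():
        path = f"{prefix}.{key}" if prefix else key
        if key.lower() in PII_KEYWORDS:
            found.append(path)
        elif isinstance(value, dict):
            # Descend into nested objects so 'user.email' is also found.
            found.extend(find_pii_paths(value, path))
    return found


def mask(value: Any, visible: int = 2) -> str:
    """Keep the first `visible` characters and replace the rest with '*'."""
    text = str(value)
    return text[:visible] + "*" * max(len(text) - visible, 0)


def mask_pii(payload: dict) -> dict:
    """Return a copy of the payload with values under PII-named keys masked."""
    result = {}
    for key, value in payload.items():
        if key.lower() in PII_KEYWORDS:
            result[key] = mask(value)
        elif isinstance(value, dict):
            result[key] = mask_pii(value)
        else:
            result[key] = value
    return result
```

In a real deployment, the equivalent detection and masking would be defined as pipeline steps and attached to the instrumented code rather than written by hand.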

See how Streamdal lines up vs. contemporary PII Handling Tools.

PII Discovery

While the Streamdal platform excels at in-flight data identification and transformation, it is also possible to use the platform to create a robust data discovery mechanism.

For example, you could use Streamdal to discover all data that is being written or read by your applications that contains sensitive info such as PII.

To do so, you would do something like this:

- Deploy the Streamdal platform (server, console)

- Instrument your services to instantiate a Streamdal client that is configured in async mode and sampling (for example, 10 req/s).

  - Enabling async would remove the SDK client from the critical path in your application.

  - Enabling sampling would ensure that your application won’t use more resources under an unexpected, heavy load.

- Create a “discovery” pipeline that contains two steps:

  - Step 1: `Detective` with `PII_KEYWORD` detective type

    - Configure `PII_KEYWORD` to use the `Accuracy` mode

    - Set `On False` condition to `Abort All Pipelines`

  - Step 2: `HTTP Request` that is configured to send a `POST` request to a service on your end that will collect discovery data.

    - Make sure to check `Use Previous Step Results` so that the `HTTP Request` sends found `PII` data from the previous step.

- Create a service that will be able to receive “discovery” data from services instrumented with the Streamdal SDK. Make sure the “discovery” service is reachable by the “SDK-enabled” services.

  An `HTTP Request` step with `Use Previous Step Results` checked will send the following body to your “discovery” service:

      {
        "detective_result": {
          "matches": [
            {
              "type": "DETECTIVE_TYPE_PII_KEYWORD",
              "path": "email",
              "value": null,
              "pii_type": "Person"
            },
            {
              "type": "DETECTIVE_TYPE_PII_KEYWORD",
              "path": "password",
              "value": null,
              "pii_type": "Credentials"
            },
            {
              "type": "DETECTIVE_TYPE_PII_KEYWORD",
              "path": "birthdate",
              "value": null,
              "pii_type": "Person"
            },
            {
              "type": "DETECTIVE_TYPE_PII_KEYWORD",
              "path": "country",
              "value": null,
              "pii_type": "Address"
            }
          ]
        },
        "audience": {
          "service_name": "signup-service",
          "component_name": "postgresql",
          "operation_type": "OPERATION_TYPE_PRODUCER",
          "operation_name": "verifier"
        }
      }

- Assign the “discovery” pipeline to the instrumented service.
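As one possible starting point for the “discovery” service described above, the following sketch uses only the Python standard library. It assumes the request body has the shape shown in the example payload (`detective_result.matches` and `audience`). The names `summarize_discovery`, `DiscoveryHandler`, and `serve`, as well as the port, are hypothetical. Persisting results, authentication, and TLS are left out.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def summarize_discovery(body: str) -> list:
    """Flatten one discovery payload into (service, component, path, pii_type) rows."""
    data = json.loads(body)
    audience = data.get("audience", {})
    matches = data.get("detective_result", {}).get("matches", [])
    return [
        (
            audience.get("service_name"),
            audience.get("component_name"),
            match.get("path"),
            match.get("pii_type"),
        )
        for match in matches
    ]


class DiscoveryHandler(BaseHTTPRequestHandler):
    """Accepts POSTed discovery payloads and prints one tab-separated line per match."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length).decode("utf-8")
        try:
            rows = summarize_discovery(body)
        except (ValueError, AttributeError):
            # Invalid JSON, or JSON that is not an object of the expected shape.
            self.send_response(400)
            self.end_headers()
            return
        for row in rows:
            print(*row, sep="\t")
        self.send_response(204)
        self.end_headers()


def serve(port: int = 8080) -> None:
    """Run the collector until interrupted; the port is an arbitrary choice."""
    HTTPServer(("0.0.0.0", port), DiscoveryHandler).serve_forever()
```

Calling `serve()` starts the collector. The URL configured in the `HTTP Request` step would then point at this host and port.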

Reducing Costs

A lot of monitoring, tracing, data analytics, or data tools in general revolve around logs or connecting to and scanning at-rest data. If your costs are ballooning around processing data or logging, Streamdal can help reduce these costs.

Streamdal is embedded into your application code (via SDK), and supplies data pipelines to where your application logs, creates, reads, writes, or transmits data. Because of this method, and Streamdal’s utilization of Wasm to execute your rules/pipelines at near-native speeds, you can greatly reduce costs without any extra infrastructure, network hops, or performance overhead.

You could use Streamdal to reduce costs by:

- Reducing log size

  - You can attach pipelines with rules to `TRUNCATE_VALUE` anywhere in your payloads, and explicitly by length or percentage

- Extracting values

  - You can extract values anywhere in the payload (e.g. object.field) and drop all others. `EXTRACT` also gives you the option to flatten the resulting object

- Replacing, obfuscating, masking, or deleting values

  - A lot of costs can be saved by executing data transformations on the application side, which gives you the option to greatly simplify (or eliminate entirely) your usage of extraneous data handling tools.

With Streamdal embedded into your code, you can keep data costs as low as possible by handling these transformations before data leaves your applications.
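The truncate and extract transformations described above can be illustrated with a small plain-Python sketch. This is not the Streamdal implementation, and the function names are hypothetical. Two behaviours are assumptions made for the example: a percentage means “keep this percentage of the original length”, and a flattened result is keyed by the last segment of each path.

```python
def truncate_value(value: str, length=None, percentage=None) -> str:
    """Shorten a string to a fixed length, or to a percentage of its original length."""
    if length is not None:
        return value[:length]
    if percentage is not None:
        return value[: int(len(value) * percentage / 100)]
    return value


def get_path(payload: dict, path: str):
    """Look up a dotted path such as 'object.field' in nested dicts."""
    current = payload
    for part in path.split("."):
        current = current[part]
    return current


def extract(payload: dict, paths: list, flatten: bool = False) -> dict:
    """Keep only the listed dotted paths and drop everything else."""
    result = {}
    for path in paths:
        value = get_path(payload, path)
        parts = path.split(".")
        if flatten:
            # Assumption: flattened output is keyed by the final path segment.
            result[parts[-1]] = value
            continue
        # Rebuild the nesting so 'object.field' stays under 'object'.
        node = result
        for part in parts[:-1]:
            node = node.setdefault(part, {})
        node[parts[-1]] = value
    return result
```

Applied to a log entry before it is written, a combination like this keeps only the fields a downstream system needs and caps the size of the ones that remain.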

Check out all of the different transformation and detective types available for data rules and pipelines.

Simplifying Data Infrastructure

Streamdal helps keep the technology and data landscape less complex and spread out.

You can use Streamdal to reduce the amount of data infrastructure used for handling data, whether it’s for Data Privacy, PII handling, Data Quality, or by simply having a single place to handle rules and govern all of these processes (i.e. Data Operations).

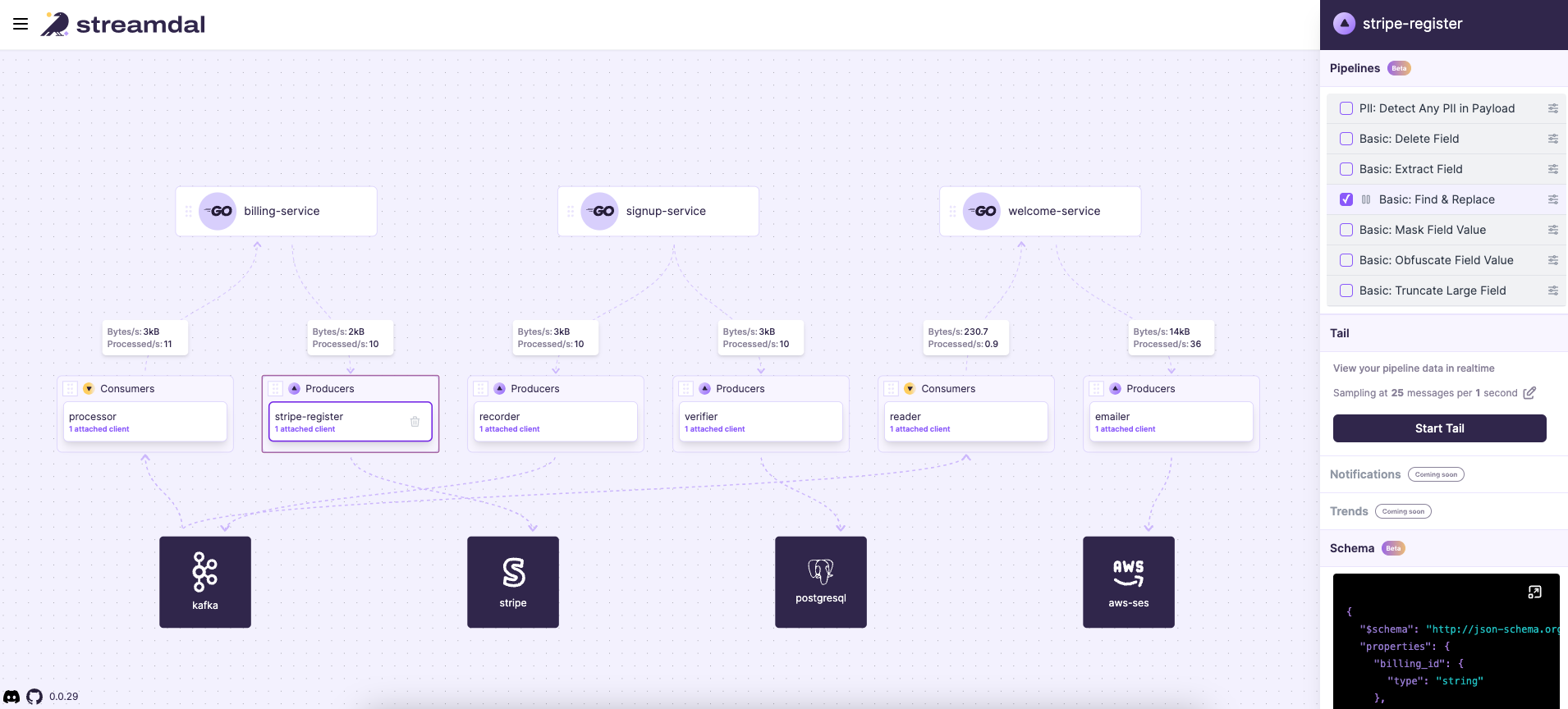

The Console UI provides a single visualization for the flow of data across your systems, along with providing a place to define and attach rules/pipelines to execute wherever data is being handled.

You can reduce the infrastructure data runs through, such as:

- Airflow

- dbt

- Flink

And much more.

There is potential to replace or bypass the need for a large number of contemporary monitoring, ETL/ELT, data pipeline, and dashboard-esque tools commonly stood up for data infrastructure.

Take a closer look at the various Streamdal pipelines you can apply to data for a better idea of where you can simplify your data infrastructure.

Real-time Data Operations

Coupled closely with the use case for simplifying data infrastructure, Streamdal can act as your single source of truth for your real-time data operations.

From the Console UI, you can get answers to all of the following questions and much more:

- What data is flowing through my applications right now?

- How is PII flowing through my systems?

- How are we governing our data, and ensuring data privacy?

- How can I get alerted any time an application would potentially violate data privacy, or cause downstream data issues?

- What is causing sudden spikes in log activity?

- Where can we begin reducing costs around data?

- What is producing the most data?

- Where is broken data coming from, and how can I ensure it is always valid?

While Streamdal doesn’t operate on data at rest, it can ensure that whenever data moves (i.e. a read or write occurs), it runs through the pipelines of checks, rules, or validations you put in place. Most issues can be solved without leaving the Console UI. You can define data rules and apply them indefinitely, exponentially, or hot-swap them as needed.

The live Streamdal demo is a simple example of what handling data operations on real-time data might look like.

Streamdal vs. Contemporary Solutions

| Features & Functionality | Contemporary Solutions | Streamdal |
|---|---|---|
| Free | ❌ | ✅ |
| Fully Open Source | ❌ | ✅ |
| Adds preventative measures for handling data | ❌ | ✅ |
| User control over the real-time movement of data | ❌ | ✅ |
| Creates and enforces geographical barriers for real-time data | ❌ | ✅ |
| Run functions and checks within data handling applications | ❌ | ✅ |
| Requires network hops | ✅ | ❌ |
| Adds extra infrastructure or data sprawl | ✅ | ❌ |
| Vendor ingests or reads your data | ✅ | ❌ |

Other Useful Aspects

Streamdal is a tool designed to give you a performant, unobtrusive mechanism to prevent data issues and simplify the processes around handling or moving data. This is accomplished through two cornerstone functionalities:

- Embedding the mechanism directly into application code (via SDK)

- Adding mechanisms for viewing real-time data (Tail CLI and UI), and applying continuous, reusable remedies for real-time data (Pipelines)

The first of those functionalities is what allows users to apply preventative rules to the earliest stage of data handling in applications: at runtime. This is why it is so popular for Data Privacy and PII Handling.

However, there are more benefits you can derive from Streamdal outside of this. You can:

- Detect, alert, notify, or transform any field in data

- Validate schemas

- Validate data

- Detect empty values and transform or fill them as necessary

Or, you could do something more specific, such as:

Performance Improvements

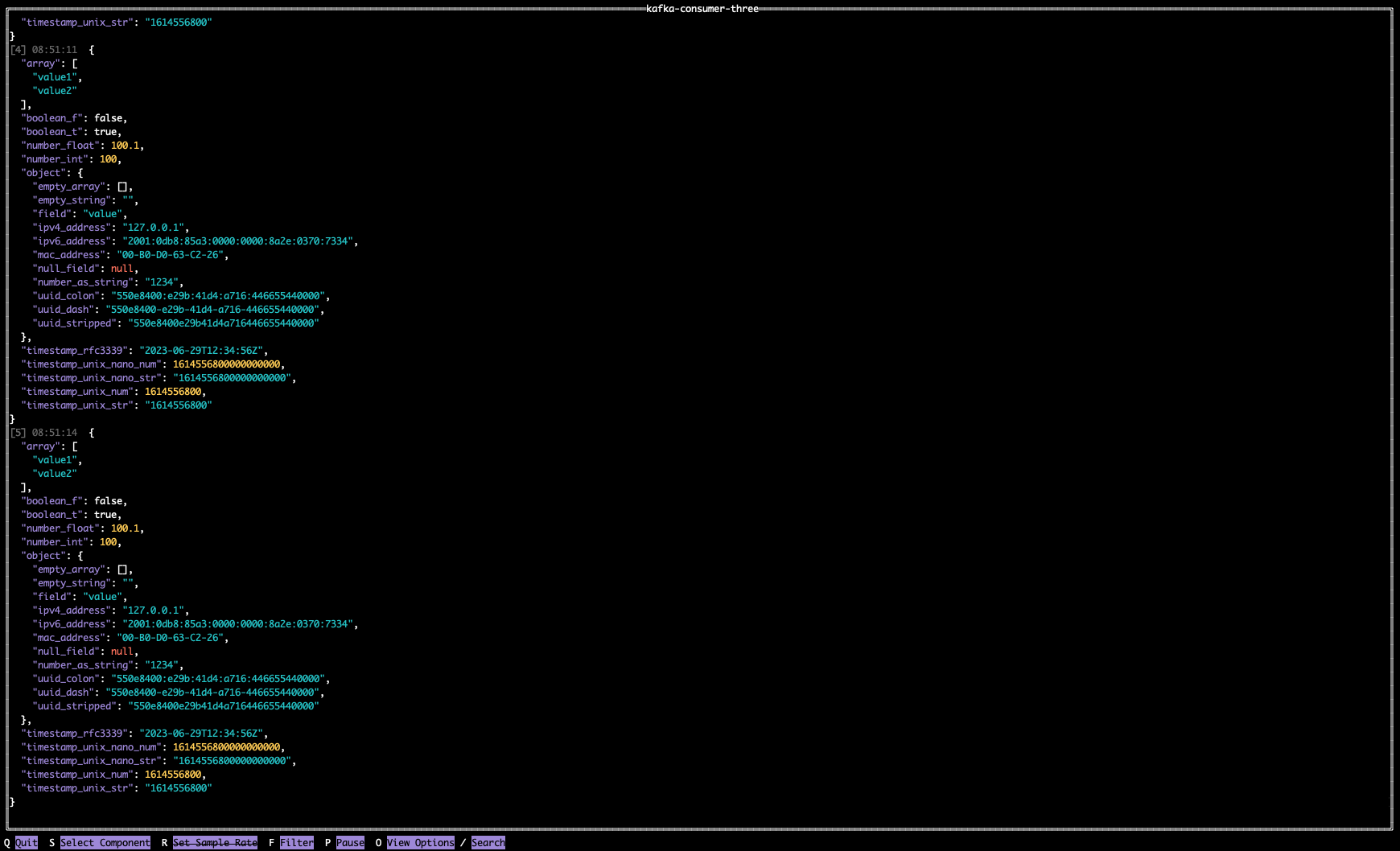

Streamdal provides real-time metrics, so you can monitor the effectiveness and test throughput capabilities of your applications. You can also view real-time data with Tail. It’s like a `tail -f`, but for data flowing through your services or applications.

Tail is also accessible via CLI.

In the future, we plan to add auto-encoding and decoding of JSON -> Protobuf. This means you can get the benefits of protobuf, without the time-consuming process of everyone having to become a protobuf expert.

Check back on our roadmap for updates on performance improvement features.

Data Flow Visualization

Streamdal can be used to generate a visual flow of data throughout your systems. This visualization provides a variety of relevant, real-time information to maximize insights. You can also view:

- Real-time data being processed

- Metrics and throughput information

- Schemas

- Data privacy and governance enforcement, along with any validation, rulesets, or processing you’re applying to data

- Where alerts and notifications are sent

- Scale of attached clients (horizontal and vertical scaling of your applications)

- Trends in data flow over time (this is coming soon!)

The Figma-like visualization that sits in the Console UI is what we call the Data Graph:

While the Console and Data Graph are an actionable dashboard, you don’t have to do anything to maintain its freshness or accuracy. It’s real-time, and dynamically updated as you embed Streamdal into your applications.

Take a look at the deployment and instrumentation to understand more about how the Data Graph is rendered.

Data Observability

Sometimes you just need to see data or the structure of the data you’re working with. Streamdal is an ideal choice for data observability because you can view:

- Your data in real time as it is processed in your applications, before it is written or as it is read

- Schemas

- Side-by-side view of before and after data transformations

If you simply want to view data without going through the UI, you can use the CLI:

Read more on the Tail feature for details on how to tap into real-time data.

Data Quality

Streamdal provides a simple way to collaborate on, define, and enforce data quality needs in a way that doesn’t hamstring the development or CI/CD process.

For example, let’s say you have a field in data that must never be null or empty. You might define a pipeline that:

- Uses detective types like `IS_EMPTY`, `HAS_FIELD`, or `IS_TYPE` to watch for `null` or empty fields

  - Optionally, you can select “notify” for alerts

- Once this is detected, moves to the next step you might want, with a transform type like `REPLACE_VALUE`, and adds a placeholder value

The above pipeline example is somewhat rudimentary, as you could also simply have `SCHEMA_VALIDATION` steps. But, the high-level ideas are:

- With Streamdal, you’re applying continuous checks on real-time data via data pipelines shipped directly into your application code. The pipelines are reusable, and there are no limits to the number of pipelines you can apply to data-handling applications

- You’re applying preventive mechanisms that ensure no data issues are caused to downstream systems by upstream producers.
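The detect-then-replace pipeline from the example earlier in this section can be sketched in plain Python as follows. This only illustrates the control flow (a detection step that gates a transform step, with an optional notification). It is not the Streamdal SDK, and `is_empty`, `run_pipeline`, and the `notify` callback are hypothetical names.

```python
def is_empty(payload: dict, field: str) -> bool:
    """True when the field is missing, None, or an empty string, list, or dict."""
    value = payload.get(field)
    if value is None:
        return True
    return isinstance(value, (str, list, dict)) and len(value) == 0


def run_pipeline(payload: dict, field: str, placeholder, notify=None) -> dict:
    """Step 1 detects an empty field; step 2 replaces it with a placeholder."""
    if not is_empty(payload, field):
        # Nothing detected, so the replace step is skipped.
        return payload
    if notify is not None:
        notify(f"empty value detected in '{field}'")
    # Return a new dict so the caller's original payload is left untouched.
    return {**payload, field: placeholder}
```

A producer would run a check like this immediately before writing, so that downstream consumers never receive the empty value.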

Read more about Pipelines and the many ways they can be constructed for data quality.

Data Enrichment and Data Categorization

This is commonly associated with Data Privacy, PII handling, or general Data Operations needs, but you can enrich and categorize real-time data with Streamdal.

On any step in the pipeline process, you can optionally enrich or categorize your data by adding key/value metadata, which is embedded into data at the earliest possible state of data.
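As a rough illustration of steps attaching key/value metadata, the sketch below runs a list of step functions and merges the metadata each one returns. It is plain Python rather than the Streamdal SDK, and all names and tagging rules are hypothetical. It also assumes the metadata travels alongside the payload, which may differ from how Streamdal embeds it.

```python
def run_steps(payload: dict, steps: list) -> tuple:
    """Run each step in order, merging the key/value metadata each one returns."""
    metadata = {}
    for step in steps:
        # Each step sees the payload plus the metadata gathered so far.
        metadata.update(step(payload, metadata))
    return payload, metadata


def tag_pii(payload: dict, metadata: dict) -> dict:
    """Categorize the payload by whether it carries an 'email' field."""
    return {"contains_pii": "true" if "email" in payload else "false"}


def tag_region(payload: dict, metadata: dict) -> dict:
    """Enrich with a region label derived from the 'country' field."""
    return {"region": "eu" if payload.get("country") in {"DE", "FR"} else "other"}
```

Because later steps receive the accumulated metadata, a step can branch on a category assigned earlier in the same run.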

Read more about Pipeline Flow Control to understand how you can enrich and categorize data, and pass metadata.